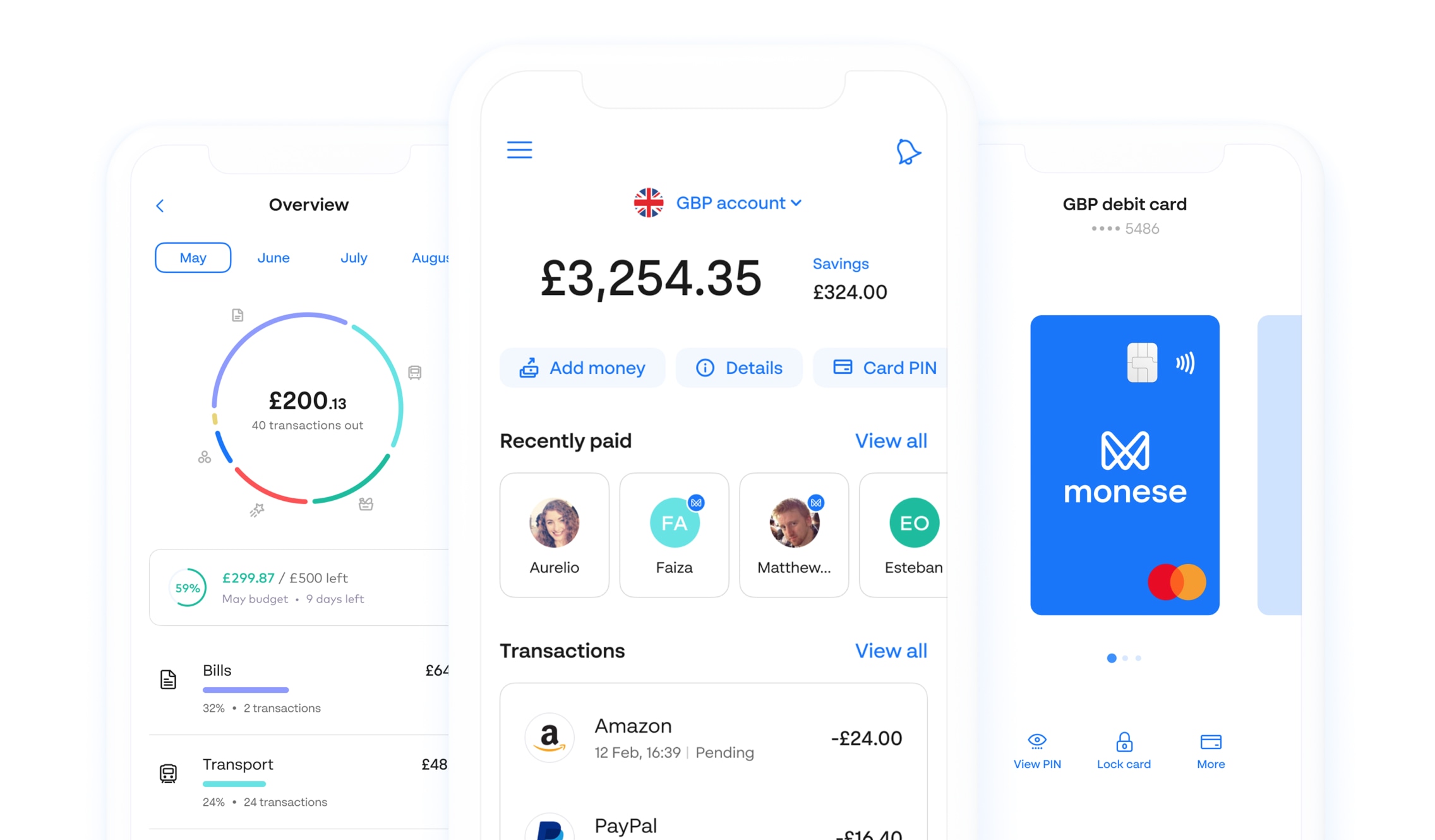

A UX framework is a set of agreed metrics that we track and measure when testing the Monese product with real users. At Monese we collect this data to paint a full picture of what our customer experience looks like. We can then understand and measure the success of our efforts, set goals and identify opportunities to improve the experience.

At Monese, I set up and lead an ongoing initiative to measure and understand how our users experience their interactions with the product. As this was a new process for the company, it was an exciting opportunity to help us move to a more user-focussed way of working.

Working with product designers and researchers, I conducted a wide-ranging project to research and understand our usage data, choose how best to process it, and establish a consistent testing process for all parts of the UX.

My Role

Process planning and researching practices

Internal rollout and stakeholder management

User research and sharing results

Data analysis in collaboration with BI

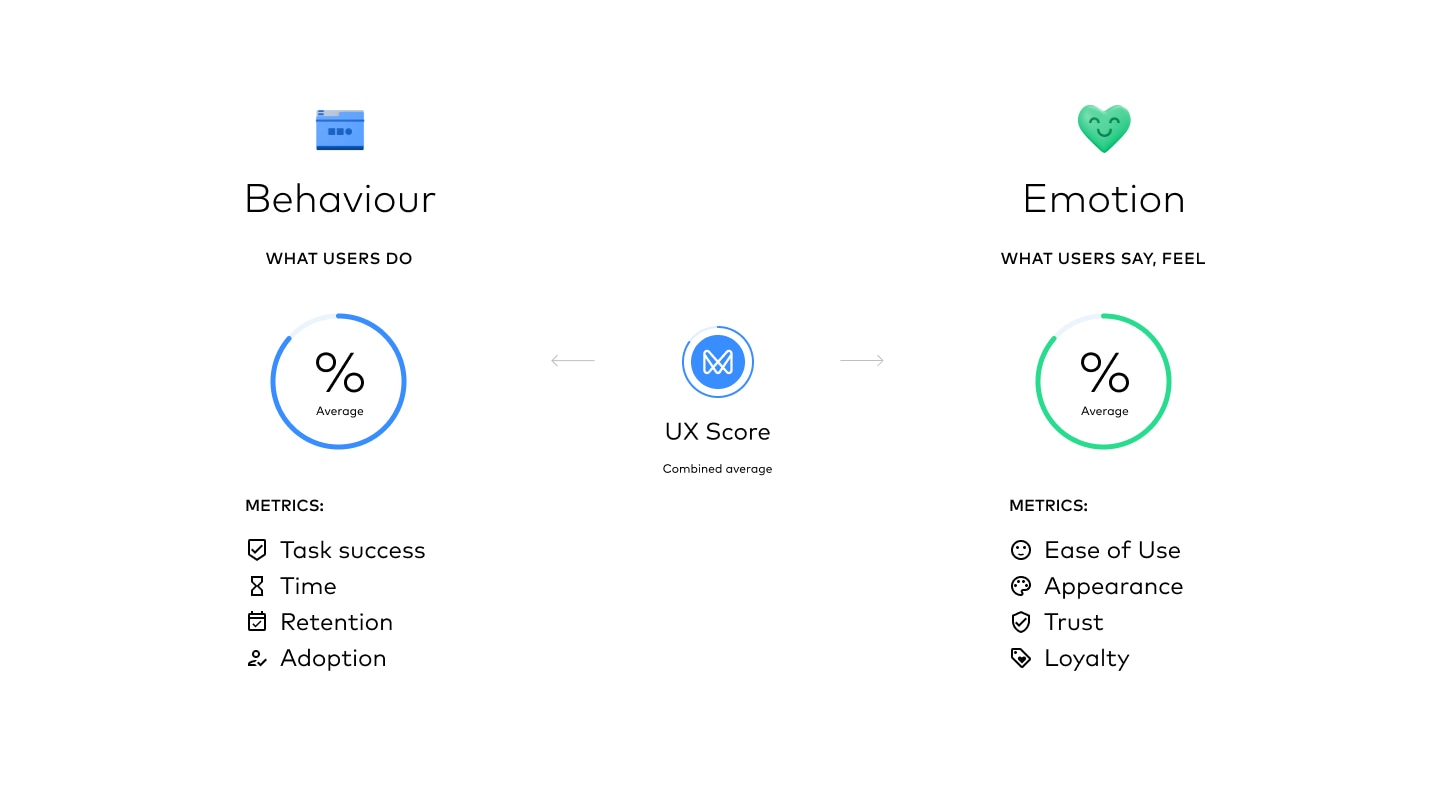

To begin measuring the experience, we need to understand how users feel when they’re using the product and what they do when they use it. These are the two main areas we measure: “User behaviour” and “User emotion”. The initial part of this process involved benchmark scoring all aspects of the product.

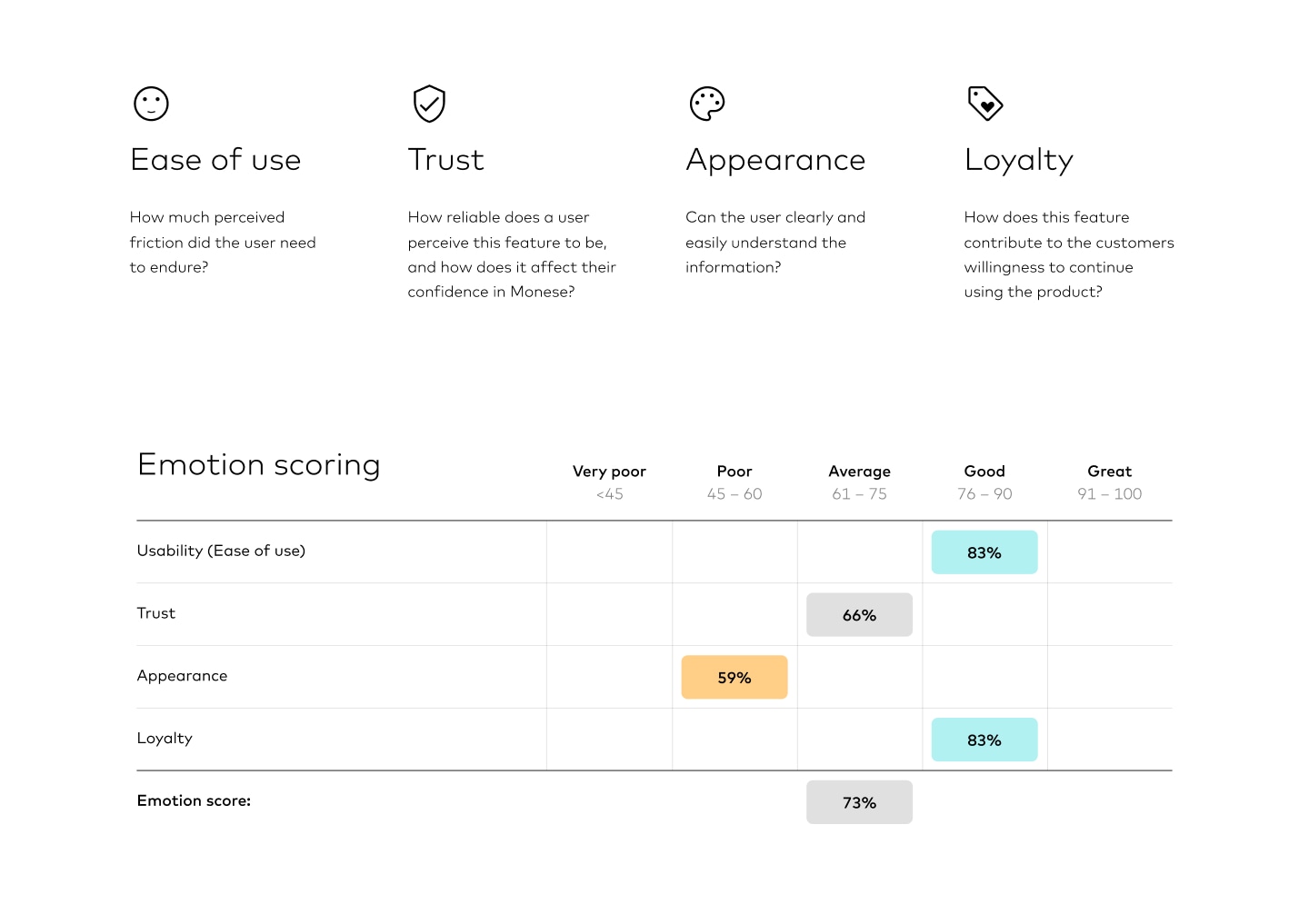

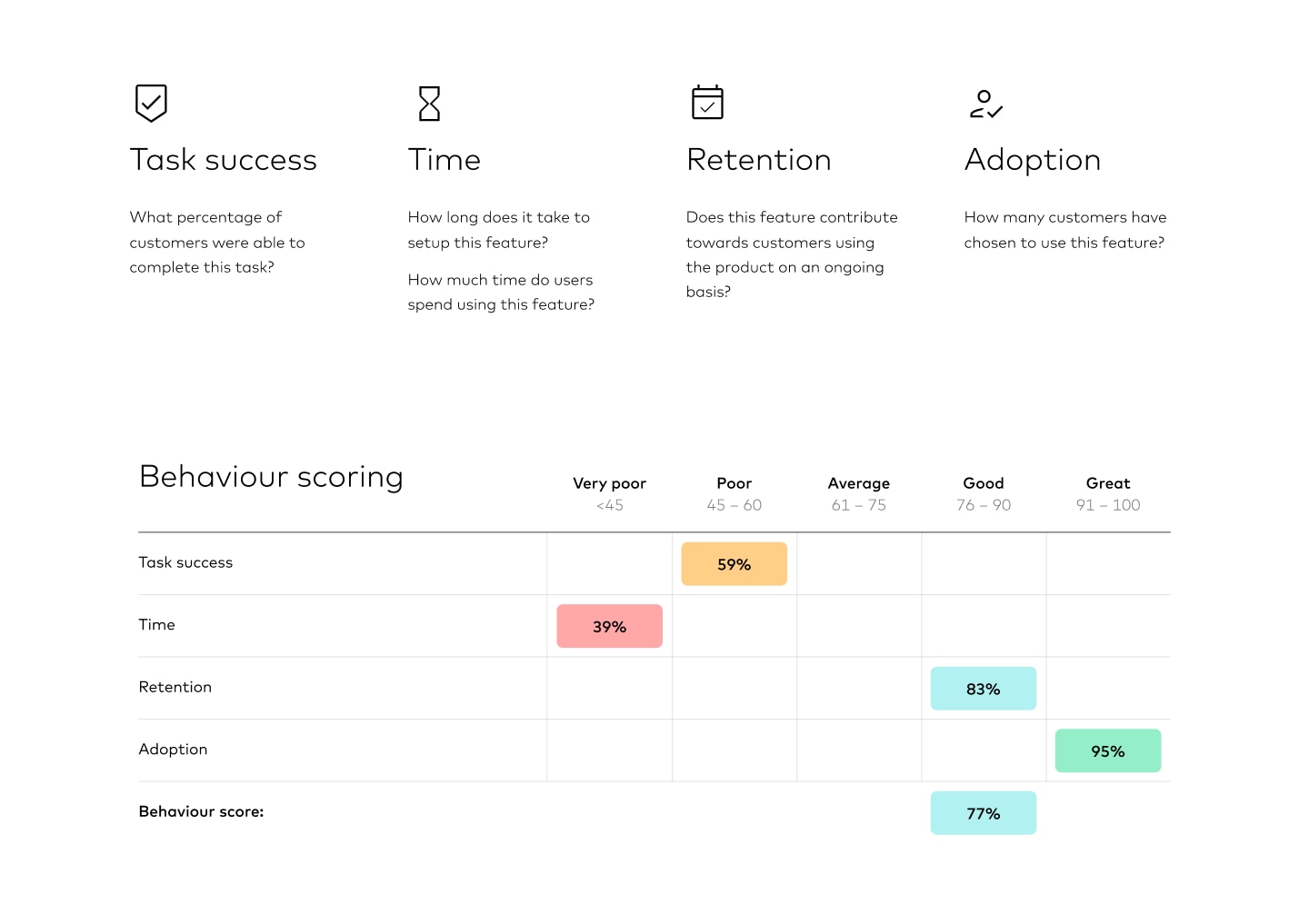

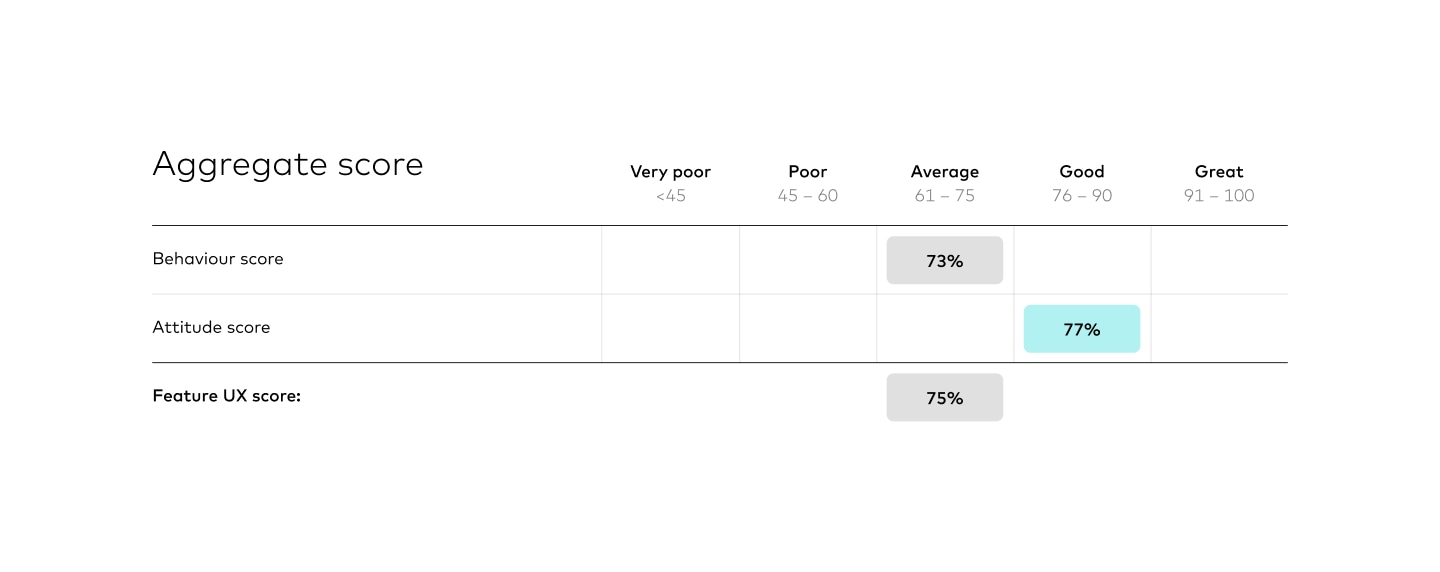

We track a series of metrics covering our users’ behavioural and emotional experience, and combine the results to generate a single score for each feature.

To understand how users are perceiving the experience, we track emotional metrics. The user flow may be performing well, but if users are frustrated or don’t trust it, they will be more likely to stop using it.

We also measure how users behave in the app to understand how effectively they perform tasks.

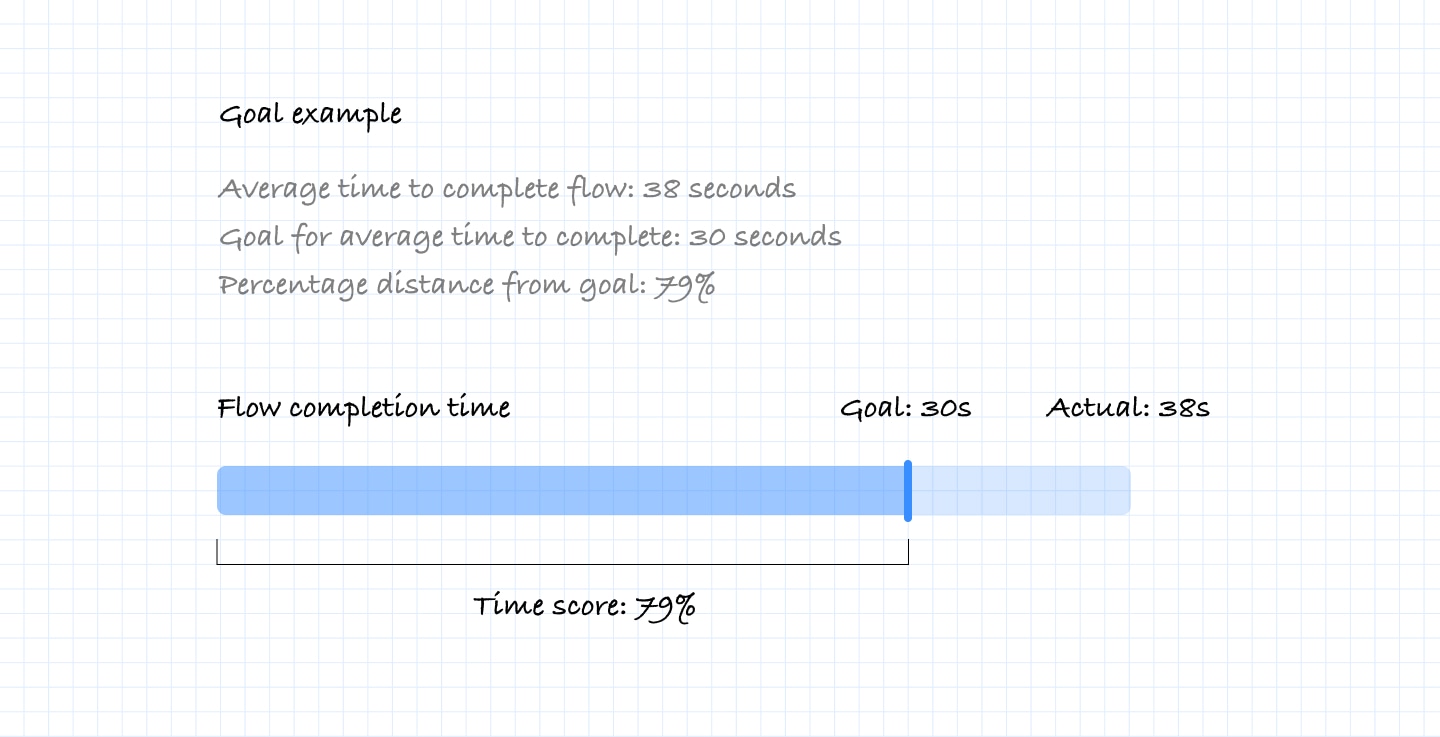

To create a score for metrics such as time, we needed to set goals in these areas. We can then see how far we are from each goal and score the metric based on that gap.

This process was really useful for us as it helped to generate a lot of internal discussion within product teams about what the goal should be, and how they could improve the product to reach it.
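As a rough illustration of goal-based scoring, the sketch below scores a lower-is-better metric such as time on task by its distance from a goal. The 0 to 100 scale, the linear interpolation, the `worst` cut-off and all of the numbers are assumptions made for this example, not the exact formula we use.

```python
def goal_score(measured: float, goal: float, worst: float) -> float:
    """Score a lower-is-better metric from 0 to 100 by its distance from the goal.

    Hypothetical parameters: `goal` is the target agreed by the product team,
    `worst` is the value at which the score bottoms out at 0.
    """
    if worst <= goal:
        raise ValueError("worst must be greater than goal for a lower-is-better metric")
    if measured <= goal:
        return 100.0  # goal reached or beaten
    if measured >= worst:
        return 0.0  # at or beyond the agreed worst case
    # Linear interpolation between the goal (100) and the worst case (0)
    return 100.0 * (worst - measured) / (worst - goal)


# Illustrative numbers only: a 30 s goal, a 90 s worst case, 45 s measured
print(goal_score(measured=45.0, goal=30.0, worst=90.0))  # 75.0
```

A linear scale keeps the score easy to explain to a product team. A different curve could be swapped in if small misses should be penalised less than large ones.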

By combining the overall behavioural and emotional scores, we can get a single score for each key feature/user story, and understand how the score has changed over time.
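One simple way to picture the combination is to average each group of metric scores and then average the two groups. The sketch below does that. The metric names, the equal weighting and the values are hypothetical and only show the shape of the calculation.

```python
from statistics import mean


def feature_score(behavioural: dict[str, float], emotional: dict[str, float]) -> float:
    """Average each group of 0-100 metric scores, then weight the two groups equally."""
    behavioural_score = mean(list(behavioural.values()))
    emotional_score = mean(list(emotional.values()))
    return round(mean([behavioural_score, emotional_score]), 1)


# Hypothetical metric names and scores for a single feature
behavioural = {"task_completion": 90.0, "time_on_task": 75.0, "error_rate": 60.0}
emotional = {"trust": 70.0, "satisfaction": 60.0}

print(feature_score(behavioural, emotional))  # 70.0
```

Running the same calculation after each round of testing gives one comparable number per feature, which is what makes the change over time visible.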

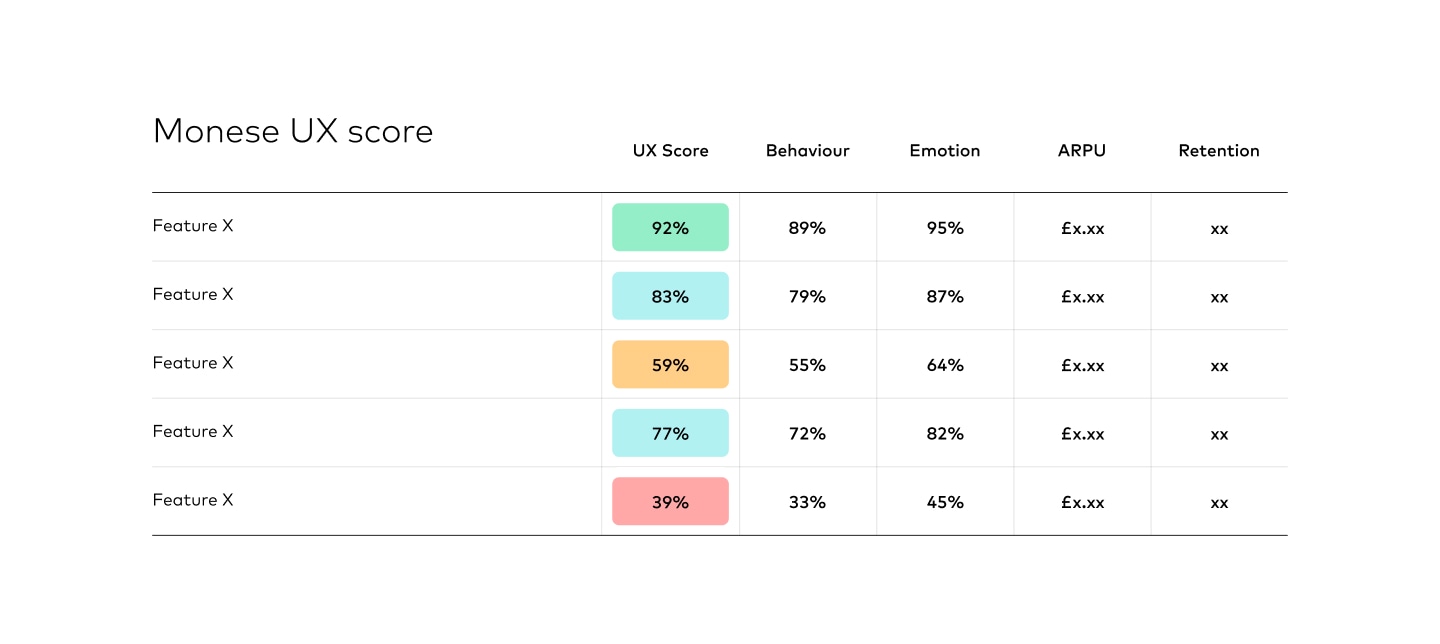

We can then feed this feature score into a matrix of all the features we’ve tested across the product, and understand how it compares to other experiences.

When we compare all of the features we’ve tested across the overall product, we can get a sense of which parts of the experience are more or less positive than others.

With this experience score overview of the full product, we can then decide which experiences to improve, based on their overall score, how frequently they’re used, and their impact on other key business metrics such as ARPU and retention.
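To show how those three inputs could be weighed together, here is a minimal sketch that ranks features by a simple priority value. The multiplicative formula, the field names, the 0 to 1 weights and the sample features are all assumptions for illustration. In practice the decision also involves judgement.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    experience_score: float  # combined 0-100 score from testing
    usage_share: float       # hypothetical: fraction of active users using it, 0-1
    business_impact: float   # hypothetical: 0-1 weight for effect on ARPU/retention


def priority(feature: Feature) -> float:
    """Higher value = stronger candidate: low score, heavy use, high impact."""
    gap = 100.0 - feature.experience_score
    return gap * feature.usage_share * feature.business_impact


# Placeholder features with made-up values
features = [
    Feature("Feature C", experience_score=55.0, usage_share=0.2, business_impact=0.3),
    Feature("Feature A", experience_score=62.0, usage_share=1.0, business_impact=0.9),
    Feature("Feature B", experience_score=78.0, usage_share=0.6, business_impact=0.7),
]

ranked = sorted(features, key=priority, reverse=True)
for feature in ranked:
    print(feature.name, round(priority(feature), 2))
```

In this example "Feature C" has the lowest experience score but ranks last, because few users reach it and its business impact is small.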

We’ve also found that this process helps build more of an internal focus on the customer experience, sparking more discussion while we research, analyse and look for solutions to improve our UX.